Upcoming Events

🌁 SF Bay Area

Tue, Mar 25th: 🧠 GenAI Collective Marin 🧠 Lunch & Learn

Wed, Mar 26th: SF Demo Night 🚀

Sat, Mar 29th: 🧠 GenAI Collective Marin 🧠 AI Investors Roundtable

🗓️ Hungry for even more AI events? Check out SF IRL or Cerebral Valley’s spreadsheet!

🗽 New York

Fri, Mar 28th: 🧠 GenAI Collective NYC 🧠 x Permanence x AI Review Presents: March Technical Roundtable

Thu, Apr 3rd: GenAI Collective @ Deep Tech Week NYC: Software’s Agentic Future

Thu, Apr 10th: NYC Demo Night 🚀

🏛️ DC

Thu, Mar 27th: AI Soirée: Dystopia or Utopia?

Thu, Apr 3rd: The Perfect Happy Hour: GenAI Collective x Prefect

🌴 Miami

Tue, Mar 25th: 🧠 GenAI Collective Miami 🧠 AI Agent Hack Night

🇨🇦 Toronto

🇵🇱 Warsaw

Tue, Apr 8th: 🧠 GenAI Collective Poland 🧠 Kickoff Event

AI News Roundup

Guest Article by AJ Green

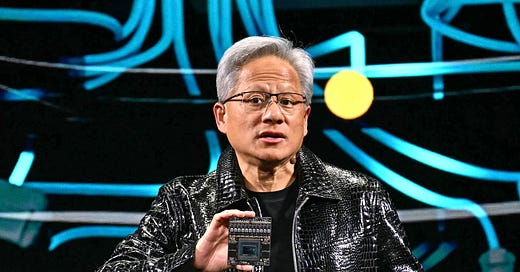

Welcome to the AI Super Bowl, GTC 2025

This week at GTC 2025, Jensen Huang took the stage in his trademark leather jacket and made one thing very clear: Nvidia isn’t just building GPUs; it’s architecting the next era of end-to-end intelligence infrastructure, a future that extends beyond software apps to physical systems. Huang pushed back on the suggestion that business is slowing down and pointed to the shift from training to inference and to Physical AI as the two primary growth pillars for the ecosystem. In a sweeping two-hour keynote dubbed “AI’s Super Bowl,” he laid out a master plan spanning silicon, robotics, simulation, and self-driving vehicles, all designed to secure Nvidia’s place at the center of AI’s next chapter.

Highlights

Nvidia revealed its long-term roadmap with three powerhouse GPUs: Blackwell Ultra (late 2025), Vera Rubin (2026), and Feynman (2028), each promising major leaps in performance and energy efficiency.

Jensen made clear that the next frontier of AI lies in empowering physical systems, doubling down on synthetic data generation and Nvidia’s upcoming Rubin GPU as the key building blocks for unlocking the ecosystem.

“Scaling is nowhere near slowing down,” said Jensen Huang, noting that today’s AI compute demands are already 100x higher than estimates from just a year ago.

Nvidia also debuted Isaac GR00T N1, which it bills as the first open humanoid robot foundation model, alongside a rich dataset designed to accelerate robotic learning in the physical world.

With the new DGX Spark and DGX Station, Nvidia is bringing data center-grade AI computing to personal workstations, with Huang calling it “the computer for the AI age.”

A new robotics physics engine, Newton, was unveiled in collaboration with Google DeepMind and Disney—demonstrated on stage with ‘Blue,’ a Star Wars-style robot.

Nvidia announced a fresh partnership with GM to develop the automaker’s first fleet of self-driving vehicles.

Why it matters

Nvidia isn’t just iterating on silicon—it’s vertically integrating the future of intelligence and doubling down on the ecosystem for Physical AI. From core compute and inference to software frameworks and simulation to real-world embodiment, GTC 2025 showcased a company building an AI monopoly by design. The message is clear: whoever controls the AI stack controls the future—and Nvidia intends to own it all by reimagining what’s possible in AI’s next frontier: inference and robotics.

OpenAI Doubles Down on DIY Agents

OpenAI has introduced new tools that let businesses build their own AI agents: custom bots that can perform tasks such as web browsing and file handling. As OpenAI continues to execute on its vision of moving from a foundation model company to a product company, AI agents are clearly the key to pushing into the application layer. By quickly expanding on its Swarm SDK and adding computer-use functionality, OpenAI could be taking a significant step toward reducing the friction of integrating autonomous AI assistants into more complex enterprise workflows.

Highlights

The newly launched Responses API integrates web search, file search, and computer interface interactions, replacing the older Assistants API, which is set to be phased out in 2026.

It enables businesses to build AI agents using the same technology behind OpenAI's Operator feature, featuring built-in tools for web searches and system navigation.

An open-source Agents SDK provides developers with orchestration capabilities for single and multi-agent systems, along with built-in safety measures and monitoring functions.

Early adopters include Stripe, which designed an agent for invoicing, and Box, which developed AI tools to navigate enterprise documents.

Why it matters

Much as it did after the DeepSeek moment, OpenAI has had its hand forced yet again, this time by China's Manus, into shipping new features and tools. We're not even 90 days into 2025, and the AI agent ecosystem has already made tremendous progress. While many agent-based solutions have generated more speculation than results, OpenAI’s expansion of customization tools might bridge the gap between concept and practical enterprise adoption.

Google Supercharges Gemini

Over the past week, Google unveiled a sweeping set of upgrades across its Gemini ecosystem, signaling a full-stack push to dominate both enterprise AI agent development and collaboration. From the launch of Gemma 3, a set of ultra-efficient open models, to new multimodal tools, collaborative Canvas workspaces, and deep personalization via Search and Google app integration, Gemini is rapidly becoming an ambient AI operating system embedded into Google’s expansive ecosystem of enterprise collaboration tools.

Highlights

Introducing Gemma 3: A new family of ultra-efficient models (1B–27B) that run on a single GPU or TPU. The 27B model rivals giants like Llama 405B while supporting a 128K context window and 140+ languages.

Gemini 2.0 Flash adds native image editing, audio generation, and real-time multimodal input via video, audio, and images—all in one seamless interface.

Canvas (Google Edition?): Collaborative workspace for writing, coding, and design. Users can prototype web apps, draft docs, or generate code—with real-time feedback and visual previews.

Gemini now connects to Search, Calendar, Photos, and more, enabling context-aware responses like restaurant recs or custom travel itineraries—based on your actual digital history (opt-in only).

Gemini will replace Google Assistant on most Android phones this year. Deep Research is now free for all users, and 1M-token context windows are live for Gemini Advanced.

Why it matters

Google is quietly, and rapidly, rebuilding the concept of what an AI assistant can be and looking to draw on its existing enterprise collaboration ecosystem to transform how we work. With Gemma 3, developers get powerful open models that are small enough to run locally. With Gemini 2.0 Flash, users gain real-time image, audio, and multimodal workflows. And with Canvas and personalization tools, Gemini becomes deeply contextual, understanding not just what you say, but what you need across your entire digital life.

Events Spotlight

🇮🇳 GenAI Collective Lands in India! 🌏

What a week. In just four days, we launched four new GenAI Collective chapters across India—Delhi, Bengaluru, Hyderabad, and Mumbai—igniting a new era for AI communities in one of the world’s most important tech landscapes.

📍 Delhi – Tuesday

We kicked things off in the capital with an energetic crowd ready to shape the future. From students to startup founders, Delhi showed up with curiosity and conviction.

📍 Bengaluru – Wednesday

The city’s entrepreneurial energy was electric. Every conversation crackled with ambition—especially around building real-world AI applications!

📍 Hyderabad – Thursday

Hosted at T-Hub, Hyderabad brought unmatched enthusiasm. There’s real momentum building here—this city is ready to make global waves in AI!

📍 Mumbai – Friday

We closed the week on a high note. A diverse and engaged crowd, rich conversation, and yes—amazing food. Mumbai’s international energy was the perfect finale!

Join the Community!

💬 Slack: GenAI Collective

𝕏 Twitter / X: @GenAICollective

🧑‍💼 LinkedIn: The GenAI Collective

📸 Instagram: @GenAICollective

We are a volunteer, non-profit organization – all proceeds solely fund future efforts for the benefit of this incredible community!

Join the GenAI Creative Studio!

The GenAI Collective is on the lookout for creative pros who are passionate about AI and storytelling. We need sharp, innovative minds to help shape our brand across social media, newsletters, PR, podcasts, and beyond. If you're ready to craft compelling content at the intersection of tech and creativity, let’s talk.

We are currently looking for:

Creative Director

Graphic Designers

Videographers/Editors

Photographers

Producers

Animators

Marketing Copywriters

While these roles are volunteer-based, the perks are big. You'll get exclusive access to all GenAI Collective events, connect with a cutting-edge AI community, and collaborate with a fast-growing team. Plus, every project you contribute to comes with a shout-out to our highly engaged audience of AI pros and industry leaders—giving your work the visibility it deserves.

If you're a creative professional eager to join a team of AI experts dedicated to building global AI communities and ready to have fun, please click the button below!

About AJ Green

AJ is the Founder & CEO of AI Advantage Agency, WCYP Chairman, and Chapter Lead for GenAI Collective Portland. A former athlete turned tech entrepreneur, AJ is a builder and superconnector on a mission to make AI the great equalizer by helping startups scale and turning disruption into opportunity.

About Eric Fett

Eric leads the development of the newsletter and online presence. He is currently an investor at NGP Capital where he focuses on Series A/B investments across enterprise AI, cyber, and industrial tech. He’s passionate about working with early-stage visionaries on their quest to create a better future. When not working, you can find him on a soccer field or at a sushi bar! 🍣