🗯️ The Byte: Can A Machine Read Your Mind? [Exclusive]

February 10, 2026

🎁 Welcome to The Byte 2.0!

The Byte is The AI Collective’s leading publication, where thought leaders share the discoveries they’ve made and the projects they are working on.

In this week’s edition of The Byte, Carol Zhu, CEO and Co-Founder of DecodeNetwork.AI, explores why understanding implicit intent remains one of AI’s hardest problems. Drawing on experience building large-scale intent prediction systems, she examines the architectural tradeoffs behind today’s models, the limits of compression and pattern matching, and what becomes possible when multimodal signals and persistent memory converge.

For more information, or to get in touch with The Byte editorial team, send us a message at marketing@aicollective.com.

~ Josh Evans, Managing Editor

Can a Machine Read Your Mind?

The Challenge of Implicit Intent

Understanding implicit intent, meaning intentions that are unspoken or only indirectly expressed, is a significant challenge for AI. Humans develop this ability through self-awareness and emotional maturity, often described as “theory of mind.” This capacity to empathize, relate, and go beyond what is made explicit is what makes human connections profound.

The future of AI may hold this possibility.

That prompts a question: will our AI assistants one day become the “bosom friend” who understands us better than anyone else?

Several systemic failures stand in the way of this becoming a reality.

In multi-turn conversations, errors compound into a “snowball effect” where early misunderstandings cascade into progressively worse responses. When someone says “What should I do next?” mid-conversation, models give generic answers instead of grounding in what came before. When someone trails off with “I guess that’s it then…” models miss the resignation entirely.

Even with 1M+ token context windows, performance degrades on the tasks that matter most: tracking implicit threads across long conversations, detecting emotional subtext, and knowing when to ask rather than guess.

This matters more now because AI is going agentic. AI is moving beyond simply answering questions to taking actions, whether that be booking travel, managing projects, or coordinating workflows. An agent that misunderstands implicit intent doesn’t just give a wrong answer; it takes a wrong action.

How Models Try to Capture Intent

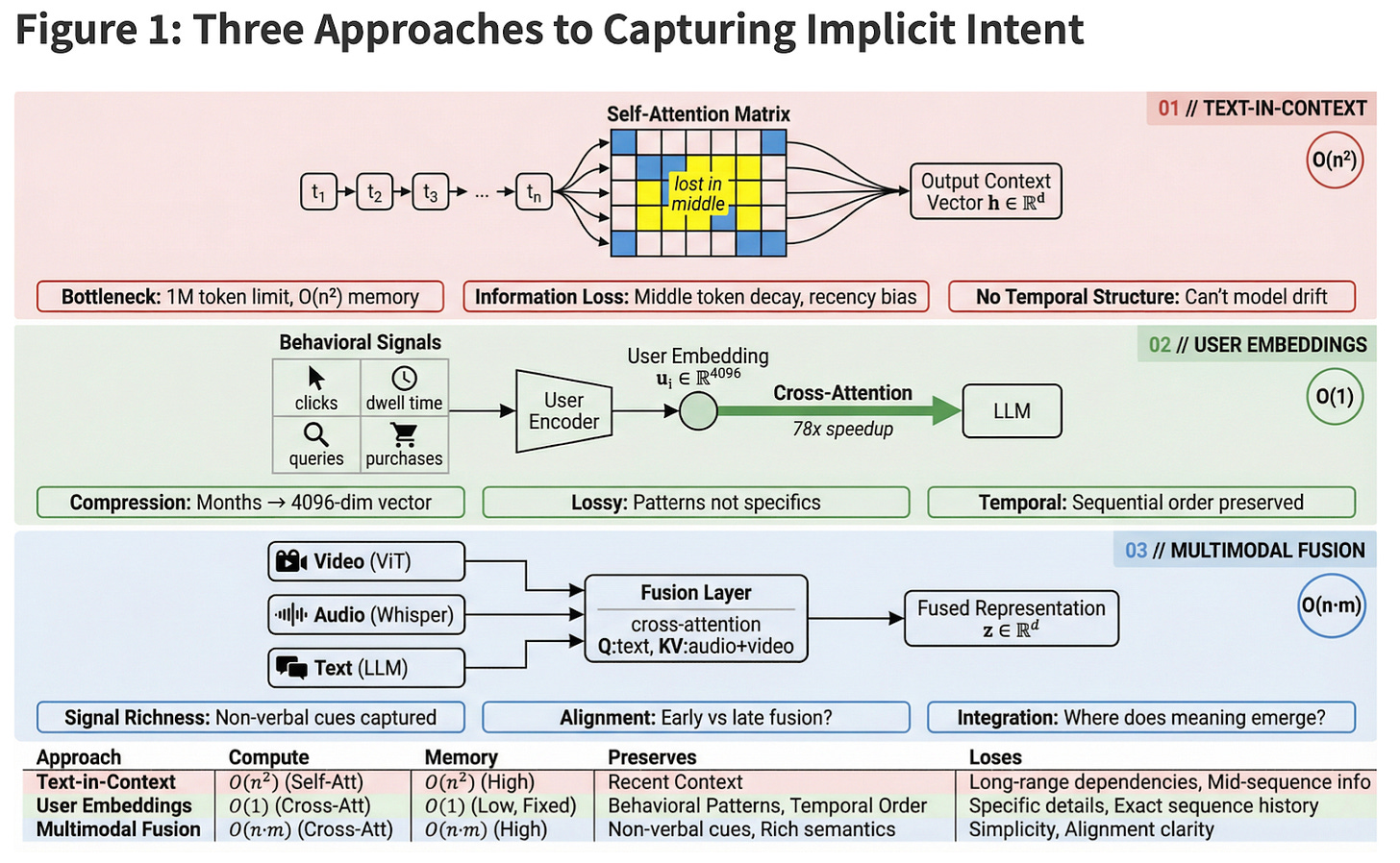

Three architectural approaches dominate current attempts to give models access to implicit user signals. Each makes a different tradeoff between richness and scalability.

Text-in-context is the naive baseline: stuff conversation history into the prompt. It’s what most chatbots do. The limits to this approach are obvious, as even 1M token windows fill up fast, and raw transcripts are noisy. More subtly, there’s no temporal structure. The model sees a bag of messages rather than a trajectory of evolving needs.

User embeddings compress behavioral signals (e.g., clicks, queries, dwell time, past interactions) into dense vectors that capture patterns across sessions. Google’s USER-LLM framework reports up to 78x computational savings over raw text history while preserving predictive power. The tradeoff is that these embeddings are lossy: they capture general patterns but drop specific details.

Multimodal fusion aligns text, audio, and visual inputs into a shared representation space. When someone says “I guess that works” while frowning with a hesitant tone, each modality carries part of the meaning. The promise is that jointly encoding these signals lets the model capture what no single channel reveals. The challenge is whether “meaning” lives in the fusion or remains distributed across modality-specific pathways that don’t fully communicate.

The diagram above illustrates the three architectural approaches in technical detail:

Row 1 (Text-in-Context): Raw conversation history tokens flow through a self-attention mechanism where the “lost in the middle” phenomenon causes attention decay for middle tokens. The output is a context vector h ∈ ℝᵈ. Key limitations include context window constraints, information loss due to attention patterns, and a lack of temporal structure to model preference drift.
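To make the context-window constraint concrete, here is a minimal sketch of how a chatbot might pack history into a fixed budget. Everything in it is an illustrative assumption rather than any particular product’s logic: the `build_prompt` helper is hypothetical, and whitespace-separated words stand in for real tokens.

```python
def build_prompt(history, new_message, max_tokens):
    """Pack the most recent turns into a fixed token budget."""

    def count_tokens(text):
        # Assumption: whitespace-separated words stand in for real tokens.
        return len(text.split())

    budget = max_tokens - count_tokens(new_message)
    kept = []
    # Walk backwards so the most recent turns survive truncation.
    for turn in reversed(history):
        cost = count_tokens(turn)
        if cost > budget:
            break
        kept.append(turn)
        budget -= cost
    kept.reverse()  # restore chronological order
    return "\n".join(kept + [new_message])


history = [
    "user: I need to cut the budget by Friday",
    "assistant: Which line items are flexible?",
    "user: Travel, maybe contractors",
]
prompt = build_prompt(history, "user: What should I do next?", max_tokens=20)
print(prompt)
```

With this toy budget, the earliest turn (the Friday deadline) is the one that gets dropped, which is exactly the context that “What should I do next?” depends on.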

Row 2 (User Embeddings): Behavioral signals (clicks, dwell time, queries, purchases) are compressed by a User Encoder (SASRec/Transformer) into a dense embedding (uᵢ ∈ ℝ⁴⁰⁹⁶), which is then injected into the LLM via cross-attention, achieving up to 78x computational savings. The tradeoff is that embeddings are lossy, capturing general patterns but missing specific details, though they do preserve sequential temporal patterns.
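The lossy-but-order-aware tradeoff can be shown with a toy encoder. This is a sketch under loud assumptions: a recency-weighted average stands in for a learned encoder such as SASRec, and the three-dimensional `EVENT_FEATURES` table and `encode_user` function are hypothetical names invented for illustration.

```python
# Hypothetical 3-d features per event type: (browse, search, buy).
EVENT_FEATURES = {
    "click": (1.0, 0.0, 0.0),
    "query": (0.0, 1.0, 0.0),
    "purchase": (0.0, 0.0, 1.0),
}


def encode_user(events, decay=0.5):
    """Compress an event sequence into one fixed-size vector.

    Recent events get more weight (weight = decay ** age), so order
    influences the result, but individual events cannot be recovered.
    """
    total = [0.0, 0.0, 0.0]
    norm = 0.0
    for age, event in enumerate(reversed(events)):
        weight = decay ** age
        norm += weight
        for i, value in enumerate(EVENT_FEATURES[event]):
            total[i] += weight * value
    return [t / norm for t in total] if norm else total


# Order matters: the same events in a different order encode differently.
recent_buyer = encode_user(["click", "click", "purchase"])
lapsed_buyer = encode_user(["purchase", "click", "click"])

# Detail is lost: one purchase and two purchases become the same vector.
one_purchase = encode_user(["purchase"])
two_purchases = encode_user(["purchase", "purchase"])
```

The output is always three numbers no matter how long the history is, which is where the efficiency comes from and also where the specifics disappear.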

Row 3 (Multimodal Fusion): Video (ViT Encoder), Audio (Whisper), and Text (LLM Embed) streams are processed in parallel and merged through a fusion layer with cross-attention, producing a fused representation z ∈ ℝᵈ. This captures rich non-verbal cues but faces the alignment problem of determining where meaning emerges.
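A stripped-down version of the fusion step might look like the sketch below. It assumes a single text query attending over one vector per modality, with no learned projection matrices, and the two-dimensional vectors (agreement, positive affect) are made-up values for the “I guess that works” example, not outputs of a real ViT or Whisper encoder.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fuse(text_vec, modality_vecs):
    """Single-query cross-attention: text attends over the other modalities.

    Returns the fused vector z (text plus attended context, a residual
    connection) and the attention weight given to each modality.
    """
    d = len(text_vec)
    names = list(modality_vecs)
    # Scaled dot-product scores between the text query and each modality.
    scores = [dot(text_vec, modality_vecs[n]) / math.sqrt(d) for n in names]
    weights = softmax(scores)
    attended = [
        sum(w * modality_vecs[n][i] for w, n in zip(weights, names))
        for i in range(d)
    ]
    z = [t + a for t, a in zip(text_vec, attended)]
    return z, dict(zip(names, weights))


# Hypothetical 2-d features: (agreement, positive affect).
text = [1.0, 0.2]  # "I guess that works" reads as mild agreement
signals = {
    "audio": [0.2, -0.8],  # hesitant tone
    "video": [0.0, -1.0],  # frown
}
z, weights = fuse(text, signals)
```

In this toy case the text alone carries slightly positive affect, while the fused vector’s affect component comes out negative: the non-verbal channels change the reading.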

The same pattern is emerging in generation, not just recognition. HPSv2 was trained on 798k human preference choices to predict which AI-generated images people will prefer, without humans ever articulating why they prefer them. Models are learning to satisfy implicit aesthetic preferences the same way they are learning to read implicit emotional cues.
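Learning from choices alone can be sketched with a simple pairwise model. This is not HPSv2’s actual training setup; it is a toy Bradley-Terry style scorer with a linear score function, and the two image features (warm colors, symmetry) and the three recorded choices are invented for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def score(w, features):
    """Linear preference score for one image's feature vector."""
    return sum(wi * xi for wi, xi in zip(w, features))


def train_preference_model(choices, dim, lr=0.1, epochs=200):
    """Fit w so that P(winner beats loser) = sigmoid(score gap) is high.

    Each choice is a (winner_features, loser_features) pair. Nobody
    labels why the winner won; the weights absorb that from the pairs.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for winner, loser in choices:
            diff = [a - b for a, b in zip(winner, loser)]
            p = sigmoid(score(w, diff))
            # Gradient ascent on the log-likelihood of the observed choice.
            for i in range(dim):
                w[i] += lr * (1.0 - p) * diff[i]
    return w


# Hypothetical features per image: (warm colors, symmetry).
choices = [
    ((0.9, 0.2), (0.1, 0.3)),
    ((0.8, 0.7), (0.3, 0.6)),
    ((0.7, 0.1), (0.2, 0.9)),
]
w = train_preference_model(choices, dim=2)

# Two unseen images that differ only in warmth.
warm_image, cool_image = (0.9, 0.5), (0.1, 0.5)
prefers_warm = score(w, warm_image) > score(w, cool_image)
```

The model ends up preferring warmer images on new inputs even though “warmth” was never named as the reason for any choice.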

The uncomfortable truth is that no current architecture solves the fundamental tension. Compression gains efficiency but loses nuance. Raw history preserves detail but doesn’t scale. Every approach is a bet on which tradeoffs matter less.

Two Theories of the Gap

The optimists point to representational convergence. A provocative line of research suggests that neural networks, regardless of architecture or training data, are converging toward shared representations of reality. Different models trained on different tasks increasingly represent similar concepts in similar ways. If this continues, AI systems might develop a generalized understanding of human behavior that transcends individual training. Intent understanding would emerge naturally from increasingly faithful models of how humans actually think.

The skeptics see something darker. As Benjamin Spiegel argued recently in The Gradient, LLMs aren’t learning world models; they’re learning “bags of heuristics.” The Othello experiments that seemed to show emergent world-modeling? On closer inspection, the models learned prediction rules that work for training data but fail on edge cases. They’re not understanding the game; they’re pattern-matching move sequences.

Applied to intent: when an LLM appears to grasp what you mean, it may be executing sophisticated syntactic pattern-matching that mimics semantic understanding without achieving it. The appearance of empathy without the substance.

Crossing the Threshold

Here’s where things get interesting. What happens when the functional approximation gets good enough?

Persistent memory changes everything. An AI that remembers not just your words but your patterns: what you ask when you’re stressed, what you avoid when you’re overwhelmed, what you actually want when you say “whatever’s fine.” Not a chatbot with a database. Something closer to a friend who’s been paying attention.

Multimodal reading adds depth. A September 2025 study found that GPT-4 predicted emotions from video with accuracy matching human evaluators and, crucially, that the structure of its emotion ratings converged with human patterns. Now combine that with persistent memory: an AI that reads your face, remembers your patterns, and tracks how your feelings evolve across conversations.

Preference prediction extends beyond text. Models are getting good at predicting what we want without understanding why we want it: in images, in music, in interface design. The same “predict implicit preference from behavioral signals” paradigm is spreading across modalities.

Put these together and you get an AI assistant that remembers your communication patterns across months, reads emotional subtext from your voice and face, anticipates your needs based on context you never explicitly provided, and generates responses tuned to preferences you never articulated.

When Prediction Feels Like Empathy

I’ve built systems that predict intent from behavioral signals: clicks, purchases, time spent on a page. The models work. Users convert. Metrics improve.

The research trajectory is clear: multimodal inputs, persistent memory, better reasoning, richer benchmarks. Each advance closes the gap between what we say and what machines infer. We will almost certainly build AI that responds to implicit intent better than most humans bother to.

When your AI assistant remembers your preferences, anticipates your needs, and responds to your unspoken frustrations with exactly the right tone, will that feel like a connection? Or will it feel like a very sophisticated mirror, reflecting back what you want to see without anyone on the other side?

From Understanding to Expression: The DecodeNetwork Approach

At DecodeNetwork.AI, we’re building in the gap between machine-readable visual features and human aesthetic response.

Vision Transformers capture what an image contains (composition, color, spatial relationships, style), not what it means to someone. Show a model a cat-shaped object covered in elephant skin, and it sees an elephant. Show a human the same image, and they see a cat. No amount of architectural refinement turns a pixel-statistical representation into a psychological one.

We use vision models to extract visual features; that’s the machine’s job, and it does it well. We pair that with something no vision model provides: a psychological framework for interpreting why certain visual patterns resonate with certain people.

We’ve built a psychological architecture across four dimensions: how you channel ambition (DRIVE), negotiate identity (SOUL), access emotional depth (HEART), and inhabit your body (BODY). Each position describes something structural about how a person operates: not a trait-to-preference correlation, but a deep pattern that shapes how someone sees.

The mechanism: a user selects images on pure gut reaction. Each image carries hidden emotional dimensions the user never sees: loneliness, awe, mystery, rebellion, and intimacy. Visual preference bypasses the rational self-presentation that corrupts every language-based personality test.

From these selections, we generate a Soul Portrait, a map of your hidden emotional architecture. We use it to match you with people whose inner landscape resonates with yours, even if their taste looks nothing like yours on the surface. That’s how we find your soulmate.

Neural networks learn statistics. Brains process signals. A machine alone cannot read your soul. But give it the right images and the right framework, and you’ll reveal yourself through what you choose when nothing is being measured.

Thanks for reading The Byte!

The Byte is The AI Collective’s insight series highlighting non-obvious AI trends and the people uncovering them, curated by Noah Frank and Josh Evans. Questions or pitches: josh@aicollective.com.

Our Premier Partner: Roam

Roam is the virtual workspace our team relies on to stay connected across time zones. It makes collaboration feel natural with shared spaces, private rooms, and built-in AI tools.

Roam’s focus on human-centered collaboration is why they’re our Premier Partner, supporting our mission to connect the builders and leaders shaping the future of AI.

Experience Roam yourself with a free 14-day trial!

➡️ Before You Go

Partner With Us

Launching a new product or hosting an event? Put your work in front of our global audience of builders, founders, and operators — we feature select products and announcements that offer real value to our readers.

👉 To be featured or sponsor a placement, reach out to our team.

The AI Collective is driven by our team of passionate volunteers. All proceeds fund future programs for the benefit of our members.

Are you subscribed across our platforms? Stay close to the community:

💬 Slack: AI Collective

𝕏 Twitter / X: @_AI_Collective

🧑‍💼 LinkedIn: The AI Collective

📸 Instagram: @_AI_Collective

About Carol Zhu

Carol Zhu is CEO and Co-Founder of DecodeNetwork.AI. She previously launched products at AWS AI, TikTok, and Credit Karma—building systems that predict what users want, and thinking deeply about what that means. You might call her a deep thinker in all the dubious senses of the term: human nature, meritocratic society, political economy, why people choose what they choose. That last question is what DecodeNetwork is building on.